The Microsoft cybersecurity reference architecture will be explained by demoing key components, starting with Azure Security Center for cross-platform visibility, protection and threat detection. This is followed by a walkthrough of how you can secure different Azure services, covering Azure … Continue reading

Category Archives: Azure

Deploy Azure Application Gateway –Step by Step

Azure Application Gateway is a web traffic load balancer that enables you to manage traffic to your web applications. Traditional load balancers operate at the transport layer (OSI layer 4 – TCP and UDP) and route traffic based on source … Continue reading

Move or Add a VM’s Primary NIC from one VNET to another vNet

In this example, the PowerShell cmdlets edit the VM NIC properties and change the subnet from one vNet to another vNet. Step 1: Get Azure VM, NIC and Resource Group Properties. Stop-AzVM -Name "vm" -ResourceGroupName "RG01" $vm = Get-AzVm -Name "vm" … Continue reading

Prepare Windows 10 Master Image & Deploy Windows Virtual Desktop

Microsoft announced Windows Virtual Desktop and began a private preview. Since then, we’ve been hard at work developing the ability to scale and deliver a true multi-session Windows 10 and Office 365 ProPlus virtual desktop and app experience on any … Continue reading

Migrate Alibaba ECS VM to Azure Cloud using Azure Site Recovery Services

In my previous blog post, I described how to migrate workloads from VMware to Azure Cloud. In this tutorial, I explain how to migrate Amazon Web Services (AWS) EC2 virtual machines (VMs) to Azure VMs by … Continue reading

Migrate SQL Server to Azure SQL Database using Database Migration Services (DMS)

The Data Migration Assistant (DMA) helps you upgrade to a modern data platform by detecting compatibility issues that can impact database functionality in your new version of SQL Server or Azure SQL Database. The Database Migration Service (DMS) lets you … Continue reading

Build DMZ in Azure Cloud

Azure routes traffic between Azure, on-premises, and Internet resources. Azure automatically creates a route table for each subnet within an Azure virtual network and adds system default routes to the table. You can override some of Azure’s system routes with … Continue reading

Azure Stack Pricing Model

Azure Stack is sold as an integrated system, with software pre-installed on validated hardware. Azure Stack comes with two operational modes: Connected and Disconnected. Connected Mode uses Azure metering services with the Microsoft Azure Cloud. The Disconnected Mode does not use … Continue reading

Amazon EC2 and Azure Virtual Machine (Instance) Comparison

Both Amazon EC2 and Azure VM provide a wide selection of VM types optimised to fit different use cases. An instance or VM is a combination of virtual CPU, virtual memory, temporary storage, and networking capacity, and gives a customer the … Continue reading

Azure AD B2B Collaboration With SharePoint Online

Azure AD B2B collaboration capabilities let you invite guest users into your Azure AD tenant and allow them to access Azure AD services. Azure AD B2B collaboration invited users can be picked from OneDrive/SharePoint Online sharing dialog boxes. OneDrive/SharePoint Online invited … Continue reading

Migrate Amazon Web Services (AWS) EC2 VM to Azure Cloud

In my previous blog post, I described how to migrate workloads from VMware to Azure Cloud. In this tutorial, I explain how to migrate Amazon Web Services (AWS) EC2 virtual machines (VMs) to Azure VMs by … Continue reading

Backup VMware Server Workloads to Azure Backup Server

In my previous article, I explained how to install and configure Azure Backup Server. This article explains how to configure Azure Backup Server to help protect VMware Server workloads. I am assuming that you already have Azure Backup Server installed. … Continue reading

Azure Backup Server v2

Azure Backup is used for backups and DR, and it works with managed disks as well as unmanaged disks. You can create a backup job with time-based backups, easy VM restoration, and backup retention policies. The following table is a … Continue reading

Migrate a SQL Server database to Azure SQL Database

Azure Database Migration Service partners with DMA to migrate existing on-premises SQL Server, Oracle, and MySQL databases to Azure SQL Database, Azure SQL Database Managed Instance or SQL Server on Azure virtual machines. Moving a SQL Server database … Continue reading

Migrating VMware Virtual Workloads to Microsoft Azure Cloud

Overview Migrating to the cloud doesn’t have to be difficult, but many organizations struggle to get started. Before they can showcase the cost benefits of moving to the cloud or determine if their workloads will lift and shift without effort, … Continue reading

Nimble Hybrid Storage for Azure VM

Microsoft Azure can be integrated with Nimble Cloud-Connected Storage based on the Nimble Storage Predictive Flash platform via Microsoft Azure ExpressRoute or Equinix Cloud Exchange connectivity solutions. The Nimble storage is located in Equinix colocation facilities at proximity to Azure … Continue reading

EMC Unity Hybrid Storage for Azure Cloud Integration

Customers who have placed their workloads both on-premises and in the cloud, forming a “Hybrid Cloud” model for their organisation, probably need on-premises storage that meets the requirements of hybrid workloads. EMC’s Unity hybrid flash storage series may be … Continue reading

Geo-mapping using Azure Traffic Manager

Microsoft Azure Traffic Manager allows you to control the distribution of user traffic for service endpoints in different datacenters and regions. Traffic Manager supports distribution of traffic for Azure VMs, Web Apps, cloud services and non-Azure endpoints. Traffic Manager uses … Continue reading

Azure Site-to-Site IPSec VPN connection with Citrix NetScaler (CloudBridge)

An Azure Site-to-Site VPN gateway connection is used to connect on-premises network to an Azure virtual network over an IPsec/IKE (IKEv1 or IKEv2) VPN tunnel. This type of connection requires a VPN device located on-premises that has an externally facing … Continue reading

Deploy Work Folder in Azure Cloud

The concept of Work Folder is to store users’ data in a convenient location. Users can access the work folder from BYOD and corporate SOE devices from anywhere. The work folder facilitates flexible, secure use of corporate information from supported devices. … Continue reading

Azure Site Recovery for VMware VMs

Azure Site Recovery orchestrates and manages disaster recovery for Azure VMs in Azure Cloud, and on-premises VMs in VMware, System Center VMM and physical servers. Prerequisites: VMware Virtual Server, Azure Subscription, Azure Virtual Network, ExpressRoute between On-premises to Azure Network … Continue reading

Configure Azure B2B, Azure Rights Management for on-premises SharePoint, Exchange and File server

Azure Information Protection (Azure RMS) is an enterprise information protection solution for any organization. Azure RMS provides classification, labeling, and protection of organization’s data. Note: This deployment also enables Azure B2B access for the Published Applications in Azure AD. Azure … Continue reading

Configuring Azure ExpressRoute using PowerShell

Microsoft Azure ExpressRoute is a private connection from on-premises networks to the Microsoft cloud over a private peering facilitated by a network service provider. With ExpressRoute, you can establish a fast, low-latency and reliable connection to Microsoft cloud services, … Continue reading

Building Multiple ADFS Farms in a Single Forest

Let’s paint a picture: you have a unique requirement to build multiple ADFS farms. You have a fully functional hybrid environment with EXO. You do not want to modify AAD Connect or the existing ADFS servers. But you want several SaaS applications to use a different ADFS farm with MFA, while their identities are managed by the same Active Directory forest used by the existing ADFS farm.

Here is the existing infrastructure:

- 1 single forest with multiple hybrid UPNs (domainA.com, domainB.com, domainC.com and many…)

- 2x ADFS servers (sts1.domainA.com)

- 2X WAP 2012 R2 cluster

- 1x AAD Connect

- 1X Office 365 Tenant with several federated domains (domainA.com, domainB.com, domainC.com and many….)

- 1x public CNAME sts1.domainA.com

Above configuration is working perfectly.

Now you would like to build a separate ADFS 2016 farm with a WAP 2016 cluster for SaaS applications. This ADFS 2016 farm will be dedicated to authenticating these SaaS applications. You would also like to turn on MFA on ADFS 2016 and add a new public authentication endpoint, such as sts2.domainA.com, for the ADFS 2016 farm.

The end goal is that once a user hits https://tenant.SaaSApp.com/, they are redirected to sts2.domainA.com and prompted for on-premises AD credentials, plus MFA if they are accessing from a public network.

New ADFS 2016 infrastructure in the same forest and domain:

- 2X ADFS 2016 Servers (sts2.domainA.com)

- 2X WAP 2016 Servers

- 1 X separate public IP for sts2.domainA.com

- 1X public CNAME for sts2.domainA.com

- 1X Private CNAME for sts2.domainA.com

Important Note: You have to prepare the Active Directory schema to use the ADFS 2016 functional level. No action is necessary in the existing ADFS 2012 R2 environment.

Guidelines and references for building the new environment:

Upgrading AD FS to Windows Server 2016 FBL

ADFS 4.0 Step by Step Guide: Federating with Workday

Branding and Customizing the ADFS Sign-in Pages

Deploy Web Application Proxy Role in Windows Server 2012 R2 –Part I

Deploy Web Application Proxy Role in Windows Server 2012 R2 –Part II

Upgrading AD FS to Windows Server 2016 FBL

This article will describe how to install new ADFS 2016 farm or upgrade existing AD FS Windows Server 2012 R2 farm to AD FS in Windows Server 2016. Prerequisites: ADFS Role in Windows Server 2016 Administrative privilege in both ADFS … Continue reading

ADFS 4.0 Step by Step Guide: Federating with Workday

This article provides step by step guidelines to implement single sign on using ADFS 4.0 as the identity provider and Workday as the identifier and service provider. Important Note: Workday does not provide a service provider metadata XML file to … Continue reading

Create Azure Internal Load Balancer using PowerShell

Input Parameters: Subnets: Subnet_10.x.x.x Resource Groups (Service Name): ServerGroup1 VMs: Server1, Server2 InternalLoadBalancerName: InternalLB1 Port: 443 Find the subnets where you would like to create an internal load balancer. Get-AzureVNetSite Find the VMs which you would like to add to … Continue reading

Windows Server 2016 Hyper-V VS VMware vSphere 6

Windows Server 2016 Hyper-V vs. VMware vSphere 6 – Virtualization Comparison

Configuring EMC DD Boost with Veeam Availability Suite

This article provides a tour of the configuration steps required to integrate EMC Data Domain System with Veeam Availability Suite 9 as well as provides benefits of using EMC DD Boost for backup application. Data Domain Boost (DD Boost) software … Continue reading

Microsoft Software Defined Storage AKA Scale-out File Server (SOFS)

Business Challenges:

- $/IOPS and $/TB

- Continuous Availability

- Fault Tolerance

- Storage Performance

- Segregation of production, development and disaster recovery storage

- De-duplication of unstructured data

- Segregation of data between production site and disaster recovery site

- Continuous break fix of Distributed File Systems (DFS) & File Server

- Continuously extending storage on the DFS servers

- Single point of failure

- File systems are not always available

- Security of file systems is a constant concern

- Proprietary, non-scalable storage

- Management of physical storage

- Vendor lock-in contract for physical storage

- Migration path from single vendor to multi vendor storage provider

- Management overhead of unstructured data

- Comprehensive management of storage platform

Solutions:

Microsoft Software Defined Storage, AKA Scale-Out File Server, is a feature designed to provide scale-out file shares that are continuously available for file-based server application storage. Scale-out file shares provide the ability to share the same folder from multiple nodes of the same cluster.

Microsoft Software Defined Storage offerings compared with third-party offerings:

| Storage feature | Third-party NAS/SAN | Microsoft Software-Defined Storage |
|---|---|---|
| Fabric | Block protocol | File protocol |
| Network | Low latency network with FC | Low latency with SMB3 Direct |
| Management | Management of LUNs | Management of file shares |
| Data De-duplication | Data de-duplication | Data de-duplication |
| Resiliency | RAID resiliency groups | Flexible resiliency options |
| Pooling | Pooling of disks | Pooling of disks |
| Availability | High | Continuous (via redundancy) |
| Copy Offloads, Snapshots | Copy offload, Snapshots | SMB copy offload, Snapshots |
| Tiering | Storage tiering | Performance with tiering |
| Persistent Write-back cache | Persistent write-back cache | Persistent write-back cache |
| Scale up | Scale up | Automatic scale-out rebalancing |
| Storage Quality of Service (QoS) | Storage QoS | Storage QoS (Windows Server 2016) |
| Replication | Replication | Storage Replica (Windows Server 2016) |
| Updates | Firmware updates | Rolling cluster upgrades (Windows Server 2016) |
| | | Storage Spaces Direct (Windows Server 2016) |
| | | Azure-consistent storage (Windows Server 2016) |

Functional use of Microsoft Scale-Out File Servers:

1. Application Workloads

- Microsoft Hyper-v Cluster

- Microsoft SQL Server Cluster

- Microsoft SharePoint

- Microsoft Exchange Server

- Microsoft Dynamics

- Microsoft System Center DPM Storage Target

- Veeam Backup Repository

2. Disaster Recovery Solution

- Backup Target

- Object storage

- Encrypted storage target

- Hyper-v Replica

- System Center DPM

3. Unstructured Data

- Continuously Available File Shares

- DFS Namespace folder target server

- Microsoft Data de-duplication

- Roaming user Profiles

- Home Directories

- Citrix User Profiles

- Outlook Cached location for Citrix XenApp Session Server

4. Management

- Single Management Point for all Scale-out File Servers

- Provide wizard driven tools for storage related tasks

- Integrated with Microsoft System Center

Business Values:

- Scalability

- Load balancing

- Fault tolerance

- Ease of installation

- Ease of management/operations

- Flexibility

- Security

- High performance

- Compliance & Certification

SOFS Architecture:

Microsoft Scale-out File Server (SOFS) is considered a Software Defined Storage (SDS) solution. Microsoft SOFS is independent of the hardware vendor as long as the compute and storage are certified by Microsoft Corporation. The following figure shows a Microsoft Hyper-V cluster, a SQL cluster and object storage on the SOFS.

Figure: Microsoft Software Defined Storage (SDS) Architecture

Figure: Microsoft Scale-out File Server (SOFS) Architecture

Figure: Microsoft SDS Components

Figure: Unified Storage Management (See Reference)

Microsoft Software Defined Storage AKA Scale-out File Server Benefits:

SOFS:

- Continuous availability file stores for Hyper-V and SQL Server

- Load-balanced IO across all nodes

- Distributed access across all nodes

- VSS support

- Transparent failover and client redirection

- Continuous availability at a share level versus a server level

De-duplication:

- Identifies duplicate chunks of data and only stores one copy

- Provides up to 90% reduction in storage required for OS VHD files

- Reduces CPU and Memory pressure

- Offers excellent reliability and integrity

- Outperforms Single Instance Storage (SIS) or NTFS compression.
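
The first bullet above, storing only one copy of each duplicate chunk, can be sketched in a few lines of Python. This is a conceptual illustration only, not how the Windows Server feature is implemented: the fixed `CHUNK_SIZE`, the SHA-256 digest and the function names `deduplicate`, `rebuild` and `savings` are all assumptions made for the example.

```python
import hashlib

CHUNK_SIZE = 4096  # assumed fixed chunk size, chosen only for illustration


def deduplicate(data: bytes, chunk_size: int = CHUNK_SIZE):
    """Split data into chunks and keep one copy of each distinct chunk.

    Returns (store, recipe): store maps a chunk digest to its bytes,
    recipe is the ordered list of digests needed to rebuild the data.
    """
    store = {}
    recipe = []
    for offset in range(0, len(data), chunk_size):
        chunk = data[offset:offset + chunk_size]
        digest = hashlib.sha256(chunk).hexdigest()
        store.setdefault(digest, chunk)  # a chunk is stored only the first time it is seen
        recipe.append(digest)
    return store, recipe


def rebuild(store, recipe) -> bytes:
    """Reassemble the original data from the chunk store and the recipe."""
    return b"".join(store[digest] for digest in recipe)


def savings(data: bytes, store) -> float:
    """Fraction of space saved by storing unique chunks only."""
    if not data:
        return 0.0
    stored = sum(len(chunk) for chunk in store.values())
    return 1 - stored / len(data)
```

Data with many repeated blocks, such as several OS VHD files built from the same image, produces a small chunk store and a long recipe, which is where the space reduction comes from.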

SMB Multichannel

- Automatic detection of SMB Multi-Path networks

- Resilience against path failures

- Transparent failover with recovery

- Improved throughput

- Automatic configuration with little administrative overhead

SMB Direct:

- The Microsoft implementation of RDMA.

- The ability to direct data transfers from a storage location to an application.

- Higher performance and lower latency through CPU offloading

- High-speed network utilization (including InfiniBand and iWARP)

- Remote storage at the speed of local storage

- A transfer rate of approximately 50Gbps on a single NIC port

- Compatibility with SMB Multichannel for load balancing and failover

VHDX Virtual Disk:

- Online VHDX Resize

- Storage QoS (Quality of Service)

Live Migration

- Easy migration of virtual machine into a cluster while the virtual machine is running

- Improved virtual machine mobility

- Flexible placement of virtual machine storage based on demand

- Migration of virtual machine storage to shared storage without downtime

Storage Protocol:

- SAN discovery (FCP, SAS, iSCSI, e.g. EMC VNX, EMC VMAX)

- NAS discovery (self-contained NAS, NAS head, e.g. NetApp ONTAP)

- File Server Discovery (Microsoft Scale-Out File Server, Unified Storage)

Unified Management:

- A new architecture provides ~10x faster disk/partition enumeration operations

- Remote and cluster-awareness capabilities

- SM-API exposes new Windows Server 2012 R2 features (Tiering, Write-back cache, and so on)

- SM-API features added to System Center VMM

- End-to-end storage high availability space provisioning in minutes in VMM console

- More Windows PowerShell

ReFS:

- More resilience to power failures

- Highest levels of system availability

- Larger volumes with better durability

- Scalable to petabyte size volumes

Storage Replica:

- Hardware agnostic storage configuration

- Provide a DR solution for planned and unplanned outages of mission critical workloads.

- Use SMB3 transport with proven reliability, scalability, and performance.

- Stretched failover clusters within metropolitan distances.

- Manage end to end storage and clustering for Hyper-V, Storage Replica, Storage Spaces, Scale-Out File Server, SMB3, Deduplication, and ReFS/NTFS using Microsoft software

- Reduce downtime, and increase reliability and productivity intrinsic to Windows.

Cloud Integration:

- Cloud-based storage service for online backups

- Windows PowerShell instrumented

- Simple, reliable Disaster Recovery solution for applications and data

- Supports System Center 2012 R2 DPM

Implementing Scale-out File Server

Scale-out File Server Recommended Configuration:

- Gather all virtual servers IOPS requirements*

- Gather Applications IOPS requirements

- Total IOPS of all applications and virtual machines must be less than the available IOPS of the physical storage

- Keep latency below 3 ms at all times for high performance

- Gather required capacity + potential growth + best practice

- N+1 Compute, Network and Storage Hardware

- Use low latency, high throughput networks

- Segregate storage network from data network using logical network (VLAN) or fibre channel

- Tools to be used

- Veeam One for Capacity Planning & Bottleneck findings

- Disk RAID and IOPS Calculator

- RAID Performance Calculator

- RAID Size Calculator

*Not all virtual servers are the same: a DHCP server generates few IOPS, while SQL Server and Exchange can generate thousands of IOPS.

*Do not place SQL Server on the same logical volume (LUN) with Exchange Server or Microsoft Dynamics or Backup Server.

*Isolate high IO workloads to separate logical volume or even separate storage pool if possible.
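
The sizing rule above (total workload IOPS must stay below the available IOPS of the physical storage) can be checked with a short script once the per-workload figures are gathered. The sketch below is an assumption-laden illustration: the `Workload` class, the `fits` function and the 20% `headroom` default are hypothetical and do not come from any Microsoft or Veeam tool.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    iops: int  # measured or estimated peak IOPS for this workload


def total_iops(workloads) -> int:
    """Sum the IOPS demand of all workloads."""
    return sum(w.iops for w in workloads)


def fits(workloads, available_iops: float, headroom: float = 0.2) -> bool:
    """Return True if demand stays below the available IOPS.

    headroom is an assumed safety margin (20% by default) kept free
    for growth and bursts; set it to 0 to apply the bare rule.
    """
    usable = available_iops * (1 - headroom)
    return total_iops(workloads) < usable
```

For example, a DHCP server at 50 IOPS, a SQL Server at 4,000 IOPS and an Exchange server at 3,000 IOPS add up to 7,050 IOPS, which fits a pool rated at 10,000 IOPS with 20% headroom but not one rated at 8,000 IOPS.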

Prerequisites for Scale-Out File Server

- Install File and Storage Services server role, and the Failover Clustering feature on the cluster nodes

- Configure Microsoft failover Clusters using this article Windows Server 2012: Failover Clustering Deep Dive Part II

- Add Cluster Share Volume

- Log on to the server as a member of the local Administrators group.

- Open Server Manager> Click Tools, and then click Failover Cluster Manager.

- Click Storage, right-click the disk that you want to add to the cluster shared volume, and then click Add to Cluster Shared Volumes> Add Storage Presented to this cluster.

Configure Scale-out File Server

- Open Failover Cluster Manager> Right-click the name of the cluster, and then click Configure Role.

- On the Before You Begin page, click Next.

- On the Select Role page, click File Server, and then click Next.

- On the File Server Type page, select the Scale-Out File Server for application data option, and then click Next.

- On the Client Access Point page, in the Name box, type a NetBIOS name for the Scale-Out File Server, and then click Next.

- On the Confirmation page, confirm your settings, and then click Next.

- On the Summary page, click Finish.

Create Continuously Available File Share

- Open Failover Cluster Manager>Expand the cluster, and then click Roles.

- Right-click the file server role, and then click Add File Share.

- On the Select the profile for this share page, click SMB Share – Applications, and then click Next.

- On the Select the server and path for this share page, click the name of the cluster shared volume, and then click Next.

- On the Specify share name page, in the Share name box, type a name for the file share, and then click Next.

- On the Configure share settings page, ensure that the Continuously Available check box is selected, and then click Next.

- On the Specify permissions to control access page, click Customize permissions, grant the following permissions, and then click Next:

- To use Scale-Out File Server file share for Hyper-V: All Hyper-V computer accounts, the SYSTEM account, cluster computer account for any Hyper-V clusters, and all Hyper-V administrators must be granted full control on the share and the file system.

- To use Scale-Out File Server on Microsoft SQL Server: The SQL Server service account must be granted full control on the share and the file system

- On the Confirm selections page, click Create. On the View results page, click Close.

Use SOFS for Hyper-v Server VHDX Store:

- Open Hyper-V Manager. Click Start, and then click Hyper-V Manager.

- Open Hyper-V Settings > Virtual Hard Disks > specify the location of the store as \\SOFS\VHDShare\ and specify the location of the virtual machine configuration as \\SOFS\VHDCShare

- Click OK.

Use SOFS in System Center VMM:

Use SOFS for SQL Database Store:

1. Assign SQL Service Account Full permission to SOFS Share

- Open Windows Explorer and navigate to the scale-out file share.

- Right-click the folder, and then click Properties.

- Click the Sharing tab, click Advanced Sharing, and then click Permissions.

- Ensure that the SQL Server service account has full-control permissions.

- Click OK twice.

- Click the Security tab. Ensure that the SQL Server service account has full-control permissions.

2. In SQL Server 2012, you can choose to store all database files in a scale-out file share during installation.

3. At step 20 of the SQL Setup Wizard, provide the Scale-out File Server locations, which are \\SOFS\SQLData and \\SOFS\SQLLogs

4. Create a database on the SOFS share from the existing SQL Server using a SQL script

CREATE DATABASE [TestDB]

ON PRIMARY

( NAME = N'TestDB', FILENAME = N'\\SOFS\SQLDB\TestDB.mdf' )

LOG ON

( NAME = N'TestDBLog', FILENAME = N'\\SOFS\SQLDBLog\TestDBLogs.ldf' )

GO

Use Backup & Recovery:

System Center Data Protection Manager 2012 R2

Configure and add a dedupe storage target into DPM 2012 R2. DPM 2012 R2 will not back up SOFS itself, but it will back up VHDX files stored on SOFS. Follow the Deduplicate DPM storage and protection for virtual machines with SMB storage guide to back up virtual machines.

Veeam Availability Suite

- Log on to Veeam Availability Console>Click Backup Repository> Right Click New backup Repository

- Select Shared Folder on the Type Tab

- Add SMB Backup Target \\SOFS\Repository

- Follow the wizard. Make sure the Veeam service account has full access permission to the \\SOFS\Repository share.

- Click Scale-out Repositories > right-click Add Scale-out backup repository > type the name

- Select the backup repository you created in the previous step > follow the wizard to complete the tasks.

References:

Microsoft Storage Architecture

Storage Spaces Physical Disk Validation Script

Validate Hardware

Deploy Clustered Storage Spaces

Storage Spaces Tiering in Windows Server 2012 R2

SMB Transparent Failover

Cluster Shared Volume (CSV) Inside Out

Storage Spaces – Designing for Performance

Related Articles:

Scale-Out File Server Cluster using Azure VMs

Microsoft Multi-Site Failover Cluster for DR & Business Continuity

Microsoft Multi-Site Failover Cluster for DR & Business Continuity

Not every organisation loses millions of dollars per second, but some do. Others may not lose money at that rate but treat customer service and reputation as their number one priority. These types of businesses want their workflows to be seamless and free of downtime. This article is for those who consider business continuity money well spent. Here is how it is done:

Multi-Site Failover Cluster

A Microsoft Multi-Site Failover Cluster is a group of clustered nodes distributed across multiple sites in one region, or across separate regions, connected by low-latency network and storage. As the diagram below illustrates, the Data Center A cluster nodes are connected to local SAN storage, which is replicated to SAN storage in Data Center B. Replication is handled by identical software defined storage at each site. The software defined storage replicates volumes, or Logical Unit Numbers (LUNs), from the primary site (Data Center A in this example) to the disaster recovery site (Data Center B). The Microsoft failover cluster is configured with pass-through storage, i.e. volumes, and these volumes are replicated to the DR site. At the primary and DR sites, the physical network is configured using Cisco Nexus 7000. The data network and the virtual machine network are logically segregated in Microsoft System Center VMM and on the physical switches using virtual local area networks (VLANs). A separate Storage Area Network (SAN) with low-latency storage is created at each site. Volumes of pass-through storage are replicated to the DR site using volumes of identical size.

Figure: Highly Available Multi-site Cluster

Figure: Software Defined Storage in Each Site

Design Components of Storage:

- SAN to SAN replication must be configured correctly

- Initial replication must be complete before the Failover Cluster is configured

- MPIO software must be installed on the cluster Nodes (N1, N2…N6)

- Physical and logical multipathing must be configured

- If storage is presented directly to virtual machines or cluster nodes, then NPIV must be configured on the fabric zones.

- All storage and fabric firmware must be up to date with the latest software from the manufacturer

- Identical software defined storage must be used at both sites

- If third-party software is used to replicate storage between sites, then the storage vendor must be consulted before the replication.

Further Reading:

Understanding Software Defined Storage (SDS)

How to configure SAN replication between IBM Storwize V3700 systems

Install and Configure IBM V3700, Brocade 300B Fabric and ESXi Host Step by Step

Application Scale-out File Systems

Design Components of Network:

- Isolate management, virtual and data network using VLAN

- Use a reliable IPVPN or Fibre optic provider for the replication over the network

- Eliminate all single points of failure from all network components

- Consider stretched VLAN for multiple sites

Further Reading:

Understanding Network Virtualization in SCVMM 2012 R2

Understanding VLAN, Trunk, NIC Teaming, Virtual Switch Configuration in Hyper-v Server 2012 R2

Design failover Cluster Quorum

- Use Node and File Share Witness (FSW) quorum for an even number of cluster nodes

- Place the File Share Witness at a third site

- Do not host the File Share Witness on a virtual machine at the same site

- Alternatively use Dynamic Quorum
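
To see why an even number of nodes needs a witness at a third site, it helps to count votes. The sketch below assumes a simplified static-vote model: one vote per node, one vote for the witness, and a strict majority required to keep quorum. It does not model Dynamic Quorum, which adjusts votes at runtime, and the function names are hypothetical.

```python
def votes_needed(total_votes: int) -> int:
    """Strict majority of the configured votes."""
    return total_votes // 2 + 1


def has_quorum(surviving_votes: int, total_votes: int) -> bool:
    """True if the surviving votes form a majority."""
    return surviving_votes >= votes_needed(total_votes)


def survives_site_loss(nodes_site_a: int, nodes_site_b: int, witness: bool) -> dict:
    """Check whether the remaining site keeps quorum when the other site fails.

    Assumes static votes: one per node, plus one for a witness hosted
    at a third site that stays reachable from the surviving site.
    """
    witness_vote = 1 if witness else 0
    total = nodes_site_a + nodes_site_b + witness_vote
    return {
        "lose_site_a": has_quorum(nodes_site_b + witness_vote, total),
        "lose_site_b": has_quorum(nodes_site_a + witness_vote, total),
    }
```

With three nodes per site and a witness, there are seven votes and four are needed, so either site plus the witness keeps the cluster running. Without the witness there are six votes, four are still needed, and neither site can survive the loss of the other on its own.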

Further Reading:

Understanding Dynamic Quorum in a Microsoft Failover Cluster

Design of Compute

- Use a reputable vendor to supply compute hardware compatible with Microsoft Hyper-V

- Make sure all the latest firmware updates are applied to the Hyper-V hosts

- Have the manufacturer provide the latest HBA software to be installed on the Hyper-V hosts

Further Reading:

Windows Server 2012: Failover Clustering Deep Dive Part II

Implementing a Multi-Site Failover Cluster

Step1: Prepare Network, Storage and Compute

Understanding Network Virtualization in SCVMM 2012 R2

Understanding VLAN, Trunk, NIC Teaming, Virtual Switch Configuration in Hyper-v Server 2012 R2

Install and Configure IBM V3700, Brocade 300B Fabric and ESXi Host Step by Step

Step2: Configure Failover Cluster on Each Site

Windows Server 2012: Failover Clustering Deep Dive Part II

Understanding Dynamic Quorum in a Microsoft Failover Cluster

Multi-Site Clustering & Disaster Recovery

Step3: Replicate Volumes

How to configure SAN replication between IBM Storwize V3700 systems

How to create a Microsoft Multi-Site cluster with IBM Storwize replication

Use Cases:

Use cases can be determined from current workloads, future workloads and business continuity requirements. Deploy Veeam One to determine the current workloads on your infrastructure, then project future workloads and business continuity needs. Here is a list of use cases for a multi-site cluster.

- Scale-Out File Server for application data: Stores server application data, such as Hyper-V virtual machine files, on file shares, with a level of reliability, availability, manageability, and performance similar to what you would expect from a storage area network. All file shares are simultaneously online on all nodes. File shares associated with this type of clustered file server are called scale-out file shares. This is sometimes referred to as active-active.

- File Server for general use: This type of clustered file server, and therefore all the shares associated with it, is online on one node at a time. This is sometimes referred to as active-passive or dual-active. File shares associated with this type of clustered file server are called clustered file shares.

- DFS Replication Namespace for unstructured data, e.g. user profiles, home drives, Citrix profiles
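
The difference between the first two use cases is where a share is online at any moment. The following sketch models only that distinction; the `serving_nodes` function, the role type strings and the failover choice (first healthy node) are assumptions for illustration and do not reflect the actual cluster placement logic.

```python
def serving_nodes(healthy_nodes, role_type, preferred_owner=None):
    """Return the nodes on which a clustered file share is online.

    healthy_nodes: list of node names that are currently up.
    role_type: "scale-out" (active-active) or "general" (one node at a time).
    preferred_owner: current owner node for a general-use file server.
    """
    if not healthy_nodes:
        return []
    if role_type == "scale-out":
        # Scale-out shares are online on every healthy node at once.
        return list(healthy_nodes)
    if role_type == "general":
        # A general-use share is online on a single node; if the owner
        # is down, this sketch simply fails over to the first healthy node.
        if preferred_owner in healthy_nodes:
            return [preferred_owner]
        return [healthy_nodes[0]]
    raise ValueError(f"unknown role type: {role_type}")
```

Calling `serving_nodes(["N1", "N2", "N3"], "scale-out")` returns all three nodes, while the same call with `"general"` and `preferred_owner="N2"` returns only `["N2"]`.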

Multi-Site Hyper-v Cluster for High Availability and Disaster Recovery

For most SMB customers, the nodes of the cluster that reside at their primary data center provide access to the clustered service or application, with failover occurring only between clustered nodes. However, for an enterprise customer, failure of … Continue reading

Why Managed vCenter Provider cannot be called Cloud Provider?

Before I answer the question of the title of this article, let’s start with what is public cloud and how a public cloud can be defined. In cloud computing, the word cloud (also phrased as “the cloud”) is used as … Continue reading

Manage Remote Workgroup Hyper-V Hosts with Hyper-V Manager

The following procedures were tested on Windows Server 2016 TP4 and a Windows 10 computer. Step 1: Basic Hyper-V Host Configuration. Once Hyper-V Server 2016 is installed, log on to the Hyper-V host as administrator. You will be presented with a command prompt. On … Continue reading

Understanding Software Defined Storage (SDS)

Software defined storage is an evolution of storage technology in the cloud era. It deploys storage technology without any dependency on specific storage hardware. Software defined storage (SDS) handles the traditional aspects of storage, such as managing storage policy, security, provisioning, upgrading and scaling, in software, without the headache of the hardware layer. SDS is a completely software-based product rather than a hardware-based one. A software defined storage product must have the following characteristics.

Characteristics of SDS

- Management of complete stack of storage using software

- Automation-policy driven storage provisioning with SLA

- Ability to run private, public or hybrid cloud platform

- Creation of usage metrics and billing in the control panel

- Logical storage services and capabilities eliminating dependence on the underlying physical storage systems

- Creation of logical storage pool

- Creation of logical tiering of storage volumes

- Aggregation of various physical storage devices into one or more logical pools

- Storage virtualization

- Thin provisioning of volume from logical pool of storage

- Scale out storage architecture such as Microsoft Scale out File Servers

- Virtual volumes (vVols), a proposal from VMware for a more transparent mapping between large volumes and the VM disk images within them

- Parallel NFS (pNFS), an extension that evolved within NFS and is part of NFSv4.1

- OpenStack APIs for storage interaction which have been applied to open-source projects as well as to vendor products.

- Independent of underlying storage hardware
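Several of the characteristics above (aggregating physical storage into a logical pool, and thin provisioning volumes from that pool) can be illustrated with a small model. This is only a minimal sketch in Python, not any vendor's implementation; the `StoragePool` class, its method names and the capacities are hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class StoragePool:
    """Hypothetical logical pool aggregating physical devices (sizes in GB)."""
    device_capacities_gb: list
    # volume name -> [provisioned_gb, written_gb]
    volumes: dict = field(default_factory=dict)

    @property
    def physical_gb(self):
        # The pool's capacity is the sum of all underlying devices.
        return sum(self.device_capacities_gb)

    @property
    def used_gb(self):
        # Only data actually written consumes physical space.
        return sum(written for _, written in self.volumes.values())

    def create_thin_volume(self, name, provisioned_gb):
        # Thin provisioning: nothing is reserved at creation time.
        self.volumes[name] = [provisioned_gb, 0]

    def write(self, name, gb):
        provisioned, written = self.volumes[name]
        if written + gb > provisioned:
            raise ValueError("write exceeds the volume's provisioned size")
        if self.used_gb + gb > self.physical_gb:
            raise RuntimeError("pool is out of physical capacity")
        self.volumes[name][1] = written + gb

    def oversubscription_ratio(self):
        # Provisioned capacity divided by physical capacity.
        provisioned = sum(p for p, _ in self.volumes.values())
        return provisioned / self.physical_gb


pool = StoragePool(device_capacities_gb=[2000, 2000, 4000])
pool.create_thin_volume("vm-datastore", 6000)
pool.create_thin_volume("file-share", 6000)
pool.write("vm-datastore", 1500)
print(pool.physical_gb, pool.used_gb, pool.oversubscription_ratio())
```

In this model the two volumes together promise more capacity than the pool physically holds, which is the point of thin provisioning: space is consumed only as data is written, so the oversubscription ratio needs to be monitored.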

A software defined storage must not have the following limitations.

- Glorified hardware which juggles between network and disk e.g. Dell Compellent

- Dependent systems between hardware and software e.g. Dell Compellent

- High latency and low IOPS for production VMs

- Active-passive management controller

- Repetitive hardware and software maintenance

- Administrative and management overhead

- Cost of retaining hardware and software e.g. life cycle management

- Factory-defined limitations e.g. “can’t do” situations

- Production downtime for maintenance work e.g. Dell Compellent maintenance

The following vendors provide software defined storage products in the current market.

Software Only vendor

- Atlantis Computing

- DataCore Software

- SANBOLIC

- Nexenta

- Maxta

- CloudByte

- VMware

- Microsoft

Mainstream Storage vendor

- EMC ViPR

- HP StoreVirtual

- Hitachi

- IBM SmartCloud Virtual Storage Center

- NetApp Data ONTAP

Storage Appliance vendor

- Tintri

- Nimble

- Solidfire

- Nutanix

- Zadara Storage

Hyper Converged Appliance

- Cisco (HyperFlex systems start from $59K, including one year of support)

- Nutanix

- VCE (VxRail systems start from $60K plus support)

- Simplivity Corporation

- Maxta

- Pivot3 Inc.

- Scale Computing Inc

- EMC Corporation

- VMware Inc

Ultimately, SDS should and will provide businesses with worry-free management of storage without the limitations of hardware. There are compelling use cases for an enterprise to adopt software defined storage.

Relevant Articles

- Gartner’s verdict on mid-range and enterprise class storage arrays

- Buying a SAN? How to select a SAN for your business?

- Dell Compellent: A Poor Man’s SAN

- Dell Compellent Storage to be discontinued after Dell-EMC merger

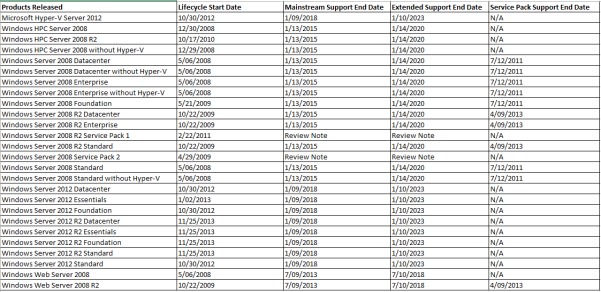

Windows Server Product Life Cycle

VMware Increases Price Again


VMware increases price again. As per VMware pricing FAQ, the following pricing model will be in effect on April 1, 2016. vSphere with Operations Management Enterprise Plus from US$4,245/CPU to US$4,395/CPU VMware vCenter Server™ Standard from US$4,995/Instance to US$5,995/Instance vSphere … Continue reading

Understanding Software Defined Networking (SDN) and Network Virtualization


The evolution of virtualization led to the evolution of a wide range of virtualized technologies, including a key building block of the data center: the network. A traditional network used to be a wired connection of physical switches and devices. A network … Continue reading

Comparing VMware vSwitch with SCVMM Network Virtualization


Feature VMware vSphere System Center VMM 2012 R2 Standard vSwitch DV Switch Switch Features Yes Yes Yes Layer 2 Forwarding Yes Yes Yes IEEE 802.1Q VLAN Tagging Yes Yes Yes Multicast Support Yes Yes Yes Network Policy – Yes Yes … Continue reading

Understanding Network Virtualization in SCVMM 2012 R2


Networking in SCVMM is a communication mechanism to and from SCVMM Server, Hyper-v Hosts, Hyper-v Cluster, virtual machines, application, services, physical switches, load balancer and third party hypervisor. Functionality includes: Logical Networking of almost “Anything” hosted in SCVMM- Logical network … Continue reading

How to implement hardware load balancer in SCVMM


The following procedure describes Network Load Balancing functionality in Microsoft SCVMM. Microsoft native NLB is automatically included in SCVMM when you install SCVMM. This procedure describes how to install and configure a third-party load balancer in SCVMM. Prerequisites: Microsoft System … Continue reading

Cisco Nexus 1000V Switch for Microsoft Hyper-V


Cisco Nexus 1000V Switch for Microsoft Hyper-V provides the following advanced features in Microsoft Hyper-V and SCVMM. Integrate physical, virtual, and mixed environments Allow dynamic policy provisioning and mobility-aware network policies Improves security through integrated virtual services and advanced Cisco NX-OS … Continue reading

Dell Compellent: A Poor Man’s SAN


I have been deploying Storage Area Networks for almost ten of the 18 years of my Information Technology career. I have deployed various traditional, software defined and converged SANs manufactured by global vendors like IBM, EMC, NetApp, HP, Dell, etc. … Continue reading

Understand “X as a Service” or get stuck in “Pizza box as a Service”


“X or Anything as a Service” (XaaS) is an acronym used by many cloud providers offering almost end-to-end services to a business. The most traditional uses of “X” are Software as a Service (SaaS), Infrastructure as a Service … Continue reading

Dell Compellent Storage to be discontinued after Dell-EMC merger

Dell is buying EMC. This is old news; you already know it. There are many business reasons why Dell is buying EMC. EMC is the number one storage vendor and a stock market heavyweight. One key business justification is to get into the enterprise market with enterprise class product lines, and a second big reason is to break into the cloud market using the dominant presence of EMC. Have you formed an opinion on what the Dell storage product line is likely to be once the merger is complete? There are many arguments for and against the various combinations of storage lines Dell could come up with. Let’s look at the current product lines of Dell and EMC.

Dell Current Product Line:

- Network Attached storage based on Dell 2U rack servers.

- Direct Attached Storage

- iSCSI and FCoE SAN solution such as PowerVault MD, EqualLogic, Compellent

EMC Product Line:

- EMC XtremIO – all-flash array, ideal for virtual desktop infrastructure (VDI), virtual servers, and databases.

- EMC VMAX – enterprise class, mission-critical storage for hyper-consolidation and delivering IT as a service.

- EMC VNX/VNXe – hybrid flash storage platform, optimized for virtual applications.

Software Defined Storage

Dell

- Software defined storage such as Dell XC Series powered by Nutanix

EMC

- EMC Isilon – High-performance, clustered network-attached storage (NAS) that scales to your performance and capacity requirements.

- EMC ScaleIO – Hyper-converged solution that uses your existing servers, and turns them into a software defined SAN with massive scalability, 10X better performance and 60% lower cost than traditional storage.

- EMC Elastic Cloud Storage (ECS) – Cloud-scale universal platform with a geo-federated namespace, multi-tenant security and in-place analytics.

- EMC ViPR Controller – delivers automation and management insights from your multivendor storage.

- EMC Service Assurance Suite – delivers service-aware software defined network management that optimizes your physical and virtual networks, increases operational efficiency by ensuring SLAs, and reduces cost by maximizing resources.

- EMC ViPR SRM – aligns your multivendor block, file and object storage tiers to application service levels so that you maximize resources, reduce costs and improve your return on investment.

Other Partnership and Products of EMC

EMC Vblock Systems – VCE is a technology partnership in which EMC plays a major role, delivering converged cloud solutions for midrange to enterprise clients. As converged infrastructure technology that provides virtualization, server, network, storage and backup, VCE Converged Solutions simplify all aspects of IT.

EMC Hybrid Cloud – Federation Enterprise Hybrid Cloud for delivering IT-as-a-Service. With thousands of engineering hours, the Federation brings together best-in-class components from EMC, VCE, and VMware to create a fully integrated, enterprise-ready solution.

VMware Partnership – EMC Corp plans to keep its majority stake in VMware Inc. EMC, which owns about 80% of VMware, bought the company in 2004 for $700 million. VMware accounted for about 22 percent of EMC’s revenue of $23.2 billion in 2013. EMC and VMware share a cloud vision. Through joint product development, solutions, and services, EMC is the number one choice for VMware customers for storage, backup, security, and management solutions.

RSA Information Security division – RSA offers data protection and identity management.

Pivotal – An EMC and VMware partnership that produces software and big data solutions.

Virtustream – A joint EMC and VMware $1.2B acquisition of this brand to provide public cloud services.

Dell to discontinue Compellent after merger – makes sense

There are already too many eggs in the basket. Would Dell continue to sell near-identical products under different names, or streamline its product lines? Dell is after streamlining. It is well known among loyal Dell customers that EqualLogic will disappear from the Dell product line in 2018; we learnt that at a Dell partner conference. The question that remains is what will happen to Compellent. In the current marketplace, VNX competes directly with Dell Compellent, but VNX has a larger customer base. VNX has been on the market for almost 20 years and is still growing fast. Compellent has been reshaped and is on the market as the SC series product line, but it has frustrated its customers with poor performance and poor customer support, along with very poor explanations and guidelines from the Dell presales team on how to align Dell storage with business requirements.

Dell can either offer customers both VNX and Compellent, knowing that Compellent did not work from the beginning, or streamline its products, kill Compellent altogether and promote VNX, which has worked for the past 20 years and has a proven track record. Killing Compellent will disturb a few already unhappy customers who simply wanted cheap SATA disks. Killing VNX, however, will disturb a wide range of customers and annoy them once and for all. The consequence would be losing customers to HP and NetApp, which Dell desperately wants to avoid as it tries to gain control of the storage market. By keeping VNX, Dell-EMC will retain EMC’s undisputed title as the number one storage vendor. This makes sense even to a non-IT-savvy person walking down the street. I firmly believe that Dell will discontinue the Compellent series altogether. Protecting the $67 billion acquisition of EMC is more important than protecting the $960 million acquisition of Compellent. It would obviously make sense for Michael Dell to kill Compellent and promote VNX as the sole mid-range storage line.

References:

Dell Compellent: A Poor Man’s SAN

EMC plans to keep stake in VMware, despite investor pressure: source

Windows Client OS Life Cycle

End of Support of Windows Client Operating Systems

End of Sales of Windows Client Operating Systems

Reference: Windows lifecycle fact sheet

Forrester Research Rates Server Hosted Virtual Desktop

Forrester Research Inc evaluates and rates server hosted virtual desktops. Forrester identified seven contenders in desktop virtualization platform. The following are the outcome of Forrester Research on VDI providers. Product Evaluated: Citrix XenDesktop 7.6 Wyse vWorkspace 8.5 Listed BoXedVDI 3.2.1 … Continue reading

Veeam integrates with EMC and NetApp Storage Snapshots!

Taking VMware snapshots and Hyper-V checkpoints can put a serious load on VM performance, and it can take considerable effort from a sysadmin to overcome this technical challenge and meet the required service level agreement. Most Veeam users run their backup and replication jobs after hours to limit the impact on the production environment, but this can’t be your only backup solution. What if the storage itself goes down or gets corrupted? Even with storage-based replication, you need to take your data out of the single fault domain. This is why many customers prefer to additionally make true backups stored on different storage. Never store production and backup data on the same storage.

Source: Veeam

Now you can take advantage of storage snapshots. Veeam decided to work with storage vendors such as EMC and NetApp to integrate with production storage, leveraging storage snapshot functionality to reduce the impact on the environment from snapshot/checkpoint removal during backup and replication.

Supported Storage

- EMC VNX/VNXe

- NetApp FAS

- NetApp FlexArray (V-Series)

- NetApp Data ONTAP Edge VSA

- HP 3PAR StoreServ

- HP StoreVirtual

- HP StoreVirtual VSA

- IBM N series

Unsupported Storage

- Dell Compellent

NOTE: My own experience with HP StoreVirtual and HP 3PAR has been awful. I had to remove HP StoreVirtual from production and introduce other Fibre Channel storage to cope with the workload. Even though Veeam tested the snapshot mechanism with HP, I would recommend avoiding HP StoreVirtual if you have a high I/O workload.

Benefits

Veeam suggests that you can get lower RPOs and lower RTOs with Backup from Storage Snapshots and Veeam Explorer for Storage Snapshots.

Veeam and EMC together allow you to:

- Minimize impact on production VMs

- Rapidly create backups from EMC VNX or VNXe storage snapshots up to 20 times faster than the competition

- Easily recover individual items in two minutes or less, without staging or intermediate steps

As a result of integrating Veeam with EMC, you can back up up to 20 times faster and restore faster using Veeam Explorer. Users can therefore achieve much lower RPOs (recovery point objectives) and lower RTOs (recovery time objectives) with minimal impact on production VMs.

How it works

Veeam Backup & Replication works with EMC and NetApp storage, along with VMware to create backups and replicas from storage snapshots in the following way.

Source: Veeam

The backup and replication job:

1. Analyzes which VMs in the job have disks on supported storage.

2. Triggers a vSphere snapshot for all VMs located on the same storage volume. (As a part of a vSphere snapshot, Veeam’s application-aware processing of each VM is performed normally.)

3. Triggers a snapshot of said storage volume once all VM snapshots have been created.

4. Retrieves the CBT information for the VM snapshots created in step 2.

5. Immediately triggers the removal of the vSphere snapshots on the production VMs.

6. Mounts the storage snapshot to one of the backup proxies connected to the storage fabric.

7. Reads new and changed virtual disk data blocks directly from the storage snapshot and transports them to the backup repository or replica VM.

8. Triggers the removal of the storage snapshot once all VMs have been backed up.
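The effect of this job sequence on a production VM can be approximated with a small timing model. This is only a rough sketch in Python under stated assumptions: the function name and all durations are hypothetical, it does not call any Veeam or vSphere API, and it ignores CBT retrieval and mount time.

```python
def vm_snapshot_open_seconds(vm_snapshot_s, storage_snapshot_s, transfer_s,
                             use_storage_snapshot):
    """Estimate how long a VM runs on its vSphere snapshot during one job.

    All arguments are assumed durations in seconds for illustration only.
    """
    if use_storage_snapshot:
        # The vSphere snapshot is removed as soon as the storage snapshot
        # exists; data is then read from the storage snapshot instead.
        return vm_snapshot_s + storage_snapshot_s
    # Classic approach: the vSphere snapshot stays open for the whole transfer.
    return vm_snapshot_s + transfer_s


# Hypothetical figures: 10 s to snapshot the VM, 5 s to snapshot the volume,
# one hour to move the data to the backup repository.
classic = vm_snapshot_open_seconds(10, 5, 3600, use_storage_snapshot=False)
integrated = vm_snapshot_open_seconds(10, 5, 3600, use_storage_snapshot=True)
print(f"classic: {classic}s, from storage snapshot: {integrated}s")
```

Under these assumed figures the VM snapshot lives for seconds rather than an hour, which is why it has little chance to grow before it is committed.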

VMs run off snapshots for the shortest possible time (subject to the storage array; EMC works better), while jobs obtain data from the VM snapshot files preserved in the storage snapshot. As a result, VM snapshots do not get a chance to grow large and can be committed very quickly without overloading production storage with an extended merge procedure, as is the case with classic techniques for backing up from VM snapshots.

Integration with EMC storage will bring great benefit to customers who want to take advantage of their storage array. Veeam Availability Suite v9 will provide the chance to reduce I/O on your storage array and bring your SLA under control.

References:

Integration with emc storage snapshot

Veeam integrates with emc snapshots

New Veeam availability suite version 9

Gartner’s verdict on mid-range and enterprise class storage arrays

Previously I wrote an article on how to select a SAN based on your requirements. Let’s look at Gartner’s verdict on storage. Gartner scores storage arrays in the mid-range and enterprise classes. Here are the details of Gartner’s scores.

Mid-Range Storage

Mid-range storage arrays are scored on manageability, reliability, availability and serviceability (RAS), performance, snapshot and replication, scalability, the ecosystem, multi-tenancy and security, and storage efficiency.

Figure: Product Rating

Figure: Storage Capabilities

Figure: Product Capabilities

Figure: Total Score

Enterprise Class Storage

Enterprise class storage is scored on performance, reliability, scalability, serviceability, manageability, RAS, snapshot and replication, ecosystem, multi-tenancy, security, and storage efficiency. Vendor reputation carries more weight in this class. Product types are clustered, scale-out, scale-up, high-end (monolithic) arrays and federated architectures. EMC, Hitachi, HP, Huawei, Fujitsu, DDN, and Oracle arrays can all cluster across more than 2 controllers. These vendors provide the functionality, performance, RAS and scalability required to be considered in this class.

Figure: Product Ratings (Source: Gartner)

Where does Dell Compellent Stand?

Dell Compellent storage arrays have known disadvantages. Users with more than two nodes must carefully plan firmware upgrades for a time of low I/O activity or a period of planned downtime. Dell Compellent is advertised as flash cached, with read SSD, write SSD, storage tiering and snapshots. In reality, Dell Compellent does its own thing in the background, which most customers aren’t aware of. It runs a RAID scrub every day whether you like it or not, which generates huge IOPS across all tiers, both SSD and SATA disks. You will experience poor I/O performance during the RAID scrub. When the write SSD tier is full, the Compellent controller automatically triggers on-demand storage tiering during business hours and forces data to be written permanently to tier 3 disks, which will literally kill virtualization, VDI and file system performance. Storage tiering and RAID scrub will send storage latency through the roof. If you are a big virtualization and VDI shop, then you are left with no option but to live with this poor performance and let the RAID scrub and tiering finish at a snail’s pace. If you have terabytes of data to back up every night, you will experience an extended backup window and unachievable RPO and RTO, even with changed block tracking (CBT) enabled in your backup product.

If you are a Compellent customer wondering why Gartner didn’t include Dell Compellent in the enterprise class, now you know: Dell Compellent doesn’t meet the functionality and capability requirements to be considered enterprise class. Another factor may worry existing Dell EqualLogic customers: no direct migration or upgrade path has been communicated to on-premises storage customers for when the OEM relationship between Dell and EMC ends. Dell ProSupport and the partner channel confirm that Dell will no longer sell SAS drives, which means I/O-intensive arrays will lose storage performance. These situations put users of co-branded Dell/EMC CX systems in the difficult position of having to choose between changing storage system technologies or changing storage vendor altogether.

Buying a SAN? How to select a SAN for your business?

A storage area network (SAN) is any high-performance network whose primary purpose is to enable storage devices to communicate with computer systems and with each other. With a SAN, the concept of a single host computer that owns data or storage isn’t meaningful. A SAN moves storage resources off the common user network and reorganizes them into an independent, high-performance network. This allows each server to access shared storage as if it were a drive directly attached to the server. When a host wants to access a storage device on the SAN, it sends out a block-based access request for the storage device.

A storage-area network is typically assembled using three principal components: cabling, host bus adapters (HBAs) and switches. Each switch and storage system on the SAN must be interconnected and the physical interconnections must support bandwidth levels that can adequately handle peak data activities.

Good SAN

A good SAN provides the following functionality to the business.

High availability: A single SAN connecting all computers to all storage puts a lot of enterprise information accessibility eggs into one basket. The SAN had better be pretty indestructible or the enterprise could literally be out of business. A good SAN implementation will have built-in protection against just about any kind of failure imaginable. This means that not only must the links and switches composing the SAN infrastructure be able to survive component failures, but the storage devices, their interfaces to the SAN, and the computers themselves must all have built-in strategies for surviving and recovering from failures as well.

Performance:

If a SAN interconnects a lot of computers and a lot of storage, it had better be able to deliver the performance they all need to do their respective jobs simultaneously. A good SAN delivers both high data transfer rates and low I/O request latency. Moreover, the SAN’s performance must be able to grow as the organization’s information storage and processing needs grow. As with other enterprise networks, it just isn’t practical to replace a SAN very often.

On the positive side, a SAN that does scale provides an extra application performance boost by separating high-volume I/O traffic from client/server message traffic, giving each a path that is optimal for its characteristics and eliminating cross talk between them.

The investment required to implement a SAN is high, both in terms of direct capital cost and in terms of the time and energy required to learn the technology and to design, deploy, tune, and manage the SAN. Any well-managed enterprise will do a cost-benefit analysis before deciding to implement storage networking. The results of such an analysis will almost certainly indicate that the biggest payback comes from using a SAN to connect the enterprise’s most important data to the computers that run its most critical applications.

But its most critical data is the data an enterprise can least afford to be without. Together, the natural desire for maximum return on investment and the criticality of operational data lead to Rule 1 of storage networking.

Great SAN

A great SAN provides additional business benefits plus additional features depending on the product and manufacturer. These include the features of storage networking, such as universal connectivity, high availability, high performance, and advanced function, as well as the benefits that support larger organizational goals, such as reduced cost and improved quality of service.

- SAN connectivity enables the grouping of computers into cooperative clusters that can recover quickly from equipment or application failures and allow data processing to continue 24 hours a day, every day of the year.

- With long-distance storage networking, 24 × 7 access to important data can be extended across metropolitan areas and indeed, with some implementations, around the world. Not only does this help protect access to information against disasters; it can also keep primary data close to where it’s used on a round-the-clock basis.

- SANs remove high-intensity I/O traffic from the LAN used to service clients. This can sharply reduce the occurrence of unpredictable, long application response times, enabling new applications to be implemented or allowing existing distributed applications to evolve in ways that would not be possible if the LAN were also carrying I/O traffic.

- A dedicated backup server on a SAN can make more frequent backups possible because it reduces the impact of backup on application servers to almost nothing. More frequent backups means more up-to-date restores that require less time to execute.

Replication and disaster recovery

With so much data stored on a SAN, your client will likely want you to build disaster recovery into the system. SANs can be set up to automatically mirror data to another site, which could be a fail safe SAN a few meters away or a disaster recovery (DR) site hundreds or thousands of miles away.

If your client wants to build mirroring into the storage area network design, one of the first considerations is whether to replicate synchronously or asynchronously. Synchronous mirroring means that as data is written to the primary SAN, each change is sent to the secondary and must be acknowledged before the next write can happen.

The alternative is to asynchronously mirror changes to the secondary site. You can configure this replication to happen as quickly as every second, or every few minutes or hours, Schulz said. This means that your client could permanently lose some data if the primary SAN goes down before it has a chance to copy its data to the secondary, so your client should make calculations based on its recovery point objective (RPO) to determine how often it needs to mirror.
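The RPO calculation mentioned above can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not a sizing tool: the function names, the steady change rate and the example figures are all hypothetical, and the worst case is taken to be a failure just before the next replication cycle completes.

```python
def worst_case_data_loss_mb(replication_interval_s, change_rate_mb_per_s):
    """Data written since the last completed cycle, assuming a steady change rate."""
    return replication_interval_s * change_rate_mb_per_s


def meets_rpo(replication_interval_s, transfer_time_s, rpo_s):
    """True if the oldest unreplicated change can never be older than the RPO.

    Assumption: a change waits at most one interval, plus the time the
    cycle needs to copy it to the secondary SAN.
    """
    return replication_interval_s + transfer_time_s <= rpo_s


# Hypothetical example: mirror every 5 minutes, 20 MB/s of changes,
# 60 s to ship each cycle, and a 15-minute RPO.
print(worst_case_data_loss_mb(300, 20))   # MB at risk between cycles
print(meets_rpo(300, 60, 900))            # 5-minute interval fits the RPO
print(meets_rpo(3600, 60, 900))           # hourly interval does not
```

Synchronous mirroring corresponds to an interval of zero in this model, at the cost of every write waiting for the secondary to acknowledge it.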

Security

With several servers able to share the same physical hardware, it should be no surprise that security plays an important role in a storage area network design. Your client will want to know that servers can only access data if they’re specifically allowed to. If your client is using iSCSI, which runs on a standard Ethernet network, it’s also crucial to make sure outside parties won’t be able to hack into the network and have raw access to the SAN.

Capacity and scalability

A good storage area network design should not only accommodate your client’s current storage needs, but it should also be scalable so that your client can upgrade the SAN as needed throughout the expected lifespan of the system. Because a SAN’s switch connects storage devices on one side and servers on the other, its number of ports can affect both storage capacity and speed, Schulz said. By allowing enough ports to support multiple, simultaneous connections to each server, switches can multiply the bandwidth to servers. On the storage device side, you should make sure you have enough ports for redundant connections to existing storage units, as well as units your client may want to add later.
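The port arithmetic behind this can be sketched as follows. It is a back-of-the-envelope estimate in Python, assuming every path uses one switch port and that multipathing spreads load evenly across all paths; the function names and the example figures are hypothetical.

```python
def switch_ports_needed(servers, paths_per_server, storage_units,
                        paths_per_storage_unit, planned_extra_storage_units=0):
    """Count switch ports for current servers and storage plus planned growth."""
    server_ports = servers * paths_per_server
    storage_ports = (storage_units + planned_extra_storage_units) * paths_per_storage_unit
    return server_ports + storage_ports


def server_bandwidth_gbps(paths_per_server, link_speed_gbps):
    """Aggregate bandwidth to one server, assuming all paths are active."""
    return paths_per_server * link_speed_gbps


# Hypothetical example: 10 servers with 2 paths each, 4 storage units with
# 2 redundant paths each, room for 2 more storage units, 8 Gbps links.
print(switch_ports_needed(10, 2, 4, 2, planned_extra_storage_units=2))
print(server_bandwidth_gbps(2, 8))
```

If the second path is configured as standby only, the bandwidth figure is that of a single link; the extra path then buys redundancy rather than speed.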

Uptime and availability

Because several servers will rely on a SAN for all of their data, it’s important to make the system very reliable and eliminate any single points of failure. Most SAN hardware vendors offer redundancy within each unit, such as dual power supplies, internal controllers and emergency batteries, but you should make sure that redundancy extends all the way to the server. Availability and redundancy can be extended across multiple systems and data centres, subject to cost-benefit analysis and specific business requirements. If your business requires a zero-downtime policy, then data should be replicated to a disaster recovery site using a SAN identical to production, with appropriate software to manage the replicated SANs.

Software and Hardware Capability

Great SAN management software delivers all the capabilities of the SAN hardware to the devices connected to the SAN. It’s very reasonable to expect to share a SAN-attached tape drive among several servers because tape drives are expensive and they’re only actually in use while backups are occurring. If a tape drive is connected to computers through a SAN, different computers could use it at different times. All the computers get backed up. The tape drive investment is used efficiently, and capital expenditure stays low.

A SAN provides fully redundant, high-performance and highly available hardware and software that connect application and business data to compute resources. Intelligent storage also provides data movement capabilities between devices.

Best OR Cheap

No vendor has ever developed all the components required to build a complete SAN but most vendors are engaged in partnerships to qualify and offer complete SANs consisting of the partner’s products.

A best-in-class SAN provides totally different performance and attributes to the business. A cheap SAN would run over the existing Ethernet network. Ask yourself the following questions to determine what you need: best or cheap?

- Is this SAN capable of delivering business benefits?

- Is this SAN capable of managing your corporate workloads?

- Are you getting the correct I/O for your workloads?

- Are you getting the correct performance metrics for your applications, file systems and virtual infrastructure?

- Are you getting value for money?

- Do you have growth potential?

- Would your next data migration and software upgrade be seamless?

- Is this SAN a heterogeneous solution for you?

Storage as a Service vs on-premises

There are many vendors who provide storage as a service with attractive pricing models. However, you should consider the following before choosing storage as a service.

- Is this vendor a partner of a recognised storage manufacturer?

- Does this vendor have a certified and experienced engineering team to look after your data?

- Does this vendor provide 24x7x365 support?

- Does this vendor provide true storage tiering?

- What is the geographic distance between the storage as a service provider’s data center and your infrastructure, and how much would WAN connectivity cost you?

- What would the storage latency and I/O be?

- Are you buying one-off capacity or a long-term corporate storage solution?

If the answers to these questions favour your business, then I would recommend you buy storage as a service; otherwise on-premises storage is best for you.

NAS OR FC SAN OR iSCSI SAN OR Unified Storage

A NAS device provides file access to clients to which it connects using file access protocols (primarily CIFS and NFS) transported on Ethernet and TCP/IP.

An FC SAN device is a block-access device (i.e. it is a disk or it emulates one or more disks) that connects to its clients using Fibre Channel and a block data access protocol such as SCSI.

iSCSI, which stands for Internet Small Computer System Interface, works on top of the Transmission Control Protocol (TCP) and allows SCSI commands to be sent end-to-end over local-area networks (LANs), wide-area networks (WANs) or the Internet.

You have to know your business before you can answer the NAS, FC SAN, iSCSI SAN or unified storage question. If you would like to maximise the benefit from a single investment, then you are looking for a unified storage solution such as NetApp or EMC Isilon. If you are looking for enterprise class, high-performance storage that isolates storage traffic from your Ethernet, reduces backup time and minimises RPO and RTO, then an FC SAN is best for you, for example EMC VNX or NetApp OnCommand Cluster. If your intention is to use existing Ethernet and have shared storage, then you are looking for an iSCSI SAN, for example Nimble Storage or the Dell SC series. Having said that, you also need to consider your structured corporate data, unstructured corporate data and application performance before making a judgement call.

Decision Making Process

Let’s make a decision matrix as follows. Just fill the blanks and see the outcome.

| Workloads | I/O | Capacity Requirement (in TB) | Storage Protocol (FC, iSCSI, NFS, CIFS) |
| --- | --- | --- | --- |
| Virtualization | | | |
| Unstructured Data | | | |
| Structured Data | | | |
| Messaging Systems | | | |
| Application virtualization | | | |
| Collaboration application | | | |
| Business Application | | | |

Functionality Matrix

| Option | Rating Requirement (1=High, 3=Medium, 5=Low) |
| --- | --- |
| Redundancy | |
| Uptime | |
| Capacity | |
| Data movement | |
| Management | |

Risk Assessment

| Risk Type | Rating (Low, Medium, High) |
| --- | --- |
| Loss of productivity | |
| Loss of redundancy | |
| Reduced Capacity | |
| Uptime | |
| Limited upgrade capacity | |
| Disruptive migration path | |

Service Data – SLA

| Service Type | SLA Target |
| --- | --- |
| Hardware Replacement | |
| Uptime | |
| Vendor Support | |
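Once the functionality matrix is filled in, one way to compare candidate arrays is a simple weighted score. The sketch below is only an illustration in Python: the weighting rule (weight = 6 minus the rating, so a rating of 1 counts most), the candidate names and every number are assumptions made for the example, not measurements of any real product.

```python
# Requirement ratings from the functionality matrix: 1 = high, 5 = low.
requirements = {
    "Redundancy": 1,
    "Uptime": 1,
    "Capacity": 3,
    "Data movement": 5,
    "Management": 3,
}

# Hypothetical candidates scored per option from 1 (poor) to 5 (excellent).
candidates = {
    "Array A": {"Redundancy": 5, "Uptime": 4, "Capacity": 3,
                "Data movement": 2, "Management": 4},
    "Array B": {"Redundancy": 3, "Uptime": 3, "Capacity": 5,
                "Data movement": 5, "Management": 3},
}


def weighted_score(ratings, scores):
    # Convert each rating into a weight so that high-priority options dominate.
    return sum((6 - rating) * scores[option] for option, rating in ratings.items())


totals = {name: weighted_score(requirements, scores)
          for name, scores in candidates.items()}
best = max(totals, key=totals.get)
print(totals, best)
```

With these made-up numbers the array that is stronger on redundancy and uptime wins even though the other offers more capacity, because those two options were rated as the highest priorities.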

Rate storage using the Gartner Magic Quadrant. The Gartner Magic Quadrant leaders (as of October 2015) are:

- EMC

- HP

- Hitachi

- Dell

- NetApp

- IBM

- Nimble Storage

To make your decision easy, select storage that enables you to manage large and rapidly growing data in a cost-effective way: storage that is built for agility and simplicity, and that provides both a tiered approach for specialized needs and the ability to unify all digital content into a single high-performance, highly scalable shared pool. It should accelerate productivity and reduce capital and operational expenditure while scaling seamlessly with the growth of mission-critical data.